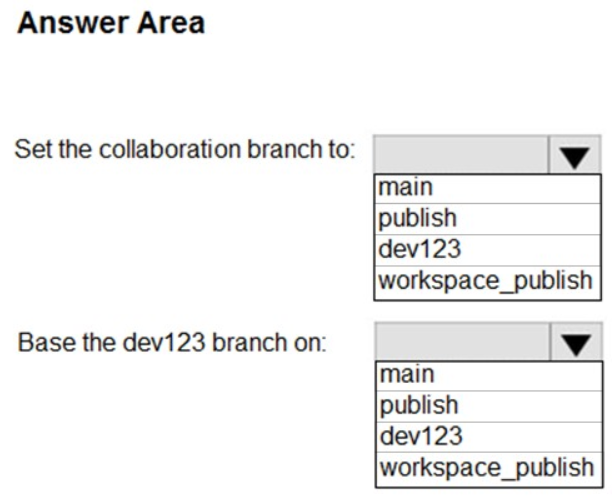

HOTSPOT

You need to configure a source control solution for Azure Synapse Analytics. The solution must meet the following requirements:

- Code must always be merged to the main branch before being published, and the main branch must be used for publishing resources.

- The workspace templates must be stored in the publish branch.

- A branch named dev123 will be created to support the development of a new feature.

What should you do? To answer, select the appropriate options in the answer area.

NOTE: Each correct selection is worth one point.

- See the Explanation section for the answer.

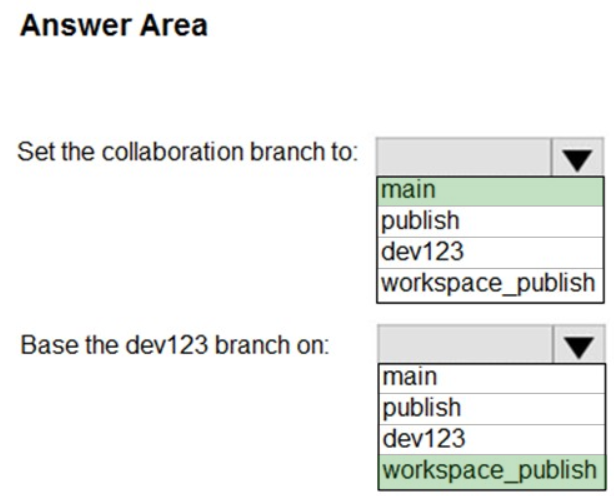

Answer(s): A

Explanation:

Box 1: main

Code must always be merged to the main branch before being published, and the main branch must be used for publishing resources.

Collaboration branch: the Azure Repos collaboration branch that is used for publishing. By default, it is master. Change this setting if you want to publish resources from another branch. You can select an existing branch or create a new one.

Each Git repository that's associated with Synapse Studio has a collaboration branch; main or master is the default. Setting the collaboration branch to main satisfies the requirement that main is the branch used for publishing.
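The Box 1 and Box 2 choices can be expressed as a small check. This is a minimal Python sketch, assuming a hypothetical `GitSettings` record that mirrors the collaboration-branch and publish-branch settings of Synapse Studio; it is not a Synapse API and only encodes the answer's reasoning.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GitSettings:
    """Hypothetical model of the Synapse Studio Git settings (not a real API)."""
    collaboration_branch: str
    # Synapse Studio saves workspace templates to workspace_publish by default.
    publish_branch: str = "workspace_publish"


def meets_requirements(settings: GitSettings) -> bool:
    """Return True if the settings match the answer to this question."""
    # Box 1: main is the collaboration branch, so publishing happens from main.
    publishes_from_main = settings.collaboration_branch == "main"
    # Box 2: workspace templates land in the default publish branch.
    templates_in_publish_branch = settings.publish_branch == "workspace_publish"
    return publishes_from_main and templates_in_publish_branch


print(meets_requirements(GitSettings(collaboration_branch="main")))    # True
print(meets_requirements(GitSettings(collaboration_branch="dev123")))  # False
```

The feature branch dev123 is deliberately not a valid collaboration branch here, because the requirements say code must reach main before it is published.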

Box 2: workspace_publish

A branch named dev123 will be created to support the development of a new feature.

The workspace templates must be stored in the publish branch.

Creating feature branches

Users can also create feature branches by clicking + New Branch in the branch dropdown.
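The workflow the requirements imply (develop in dev123, merge into main, publish only from main) can be sketched as a toy model. The `Repo` class and its methods are hypothetical and only illustrate the ordering rule; they do not call Git, Azure Repos, or Synapse.

```python
class Repo:
    """Toy model of the branch workflow; tracks the changes each branch holds."""

    def __init__(self, collaboration_branch: str = "main") -> None:
        self.collaboration_branch = collaboration_branch
        self.branches: dict[str, list[str]] = {collaboration_branch: []}
        self.published: list[str] = []

    def new_branch(self, name: str, source: str) -> None:
        # Like "+ New Branch": the new branch starts with the source branch's changes.
        self.branches[name] = list(self.branches[source])

    def commit(self, branch: str, change: str) -> None:
        self.branches[branch].append(change)

    def merge(self, source: str, target: str) -> None:
        # Stands in for a pull request: copy changes the target does not have yet.
        for change in self.branches[source]:
            if change not in self.branches[target]:
                self.branches[target].append(change)

    def publish(self, branch: str) -> list[str]:
        # Publishing is only allowed from the collaboration branch.
        if branch != self.collaboration_branch:
            raise PermissionError(f"publishing from {branch!r} is not allowed")
        self.published = list(self.branches[branch])
        return self.published


repo = Repo()
repo.new_branch("dev123", source="main")
repo.commit("dev123", "new pipeline")
repo.merge("dev123", "main")
print(repo.publish("main"))  # ['new pipeline']
```

Calling `repo.publish("dev123")` raises `PermissionError`, which mirrors the rule that code must be merged to main before it is published.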

By default, Synapse Studio generates the workspace templates and saves them into a branch called workspace_publish. To configure a custom publish branch, add a publish_config.json file to the root folder in the collaboration branch.
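A custom publish branch is configured through `publish_config.json` at the root of the collaboration branch. The Synapse source-control documentation describes Synapse Studio reading a `publishBranch` field from this file when publishing. The sketch below writes such a file with Python's standard library; the `write_publish_config` helper is hypothetical, and `repo_root` is assumed to be a local checkout of the collaboration branch (main in this question).

```python
import json
import tempfile
from pathlib import Path


def write_publish_config(repo_root: Path, publish_branch: str) -> Path:
    """Hypothetical helper: write publish_config.json to the checkout root."""
    config_path = repo_root / "publish_config.json"
    # Synapse Studio looks for the publishBranch field in this file.
    config = {"publishBranch": publish_branch}
    config_path.write_text(json.dumps(config, indent=4) + "\n")
    return config_path


if __name__ == "__main__":
    # Demo in a throwaway directory instead of a real repository checkout.
    with tempfile.TemporaryDirectory() as tmp:
        path = write_publish_config(Path(tmp), "workspace_publish")
        print(path.read_text())
```

For this question the file is optional, because `workspace_publish` is already the default; it only matters if templates must go to a differently named branch.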

Reference:

https://docs.microsoft.com/en-us/azure/synapse-analytics/cicd/source-control